Beware Killer LLMs!

The apocalyptic algorithms that signal the end of ChatGPT's growth

TL;DR: I spent several years on one of the world’s best “deceptive autonomous robotics” teams for the military and I’m going to debunk all of this nonsense around LLMs that are supposedly deceiving researchers in a bid to overthrow their human overlords!

It’s hard to keep up in the world of AI. I mean, consider that in the last week alone two things happened:

A post on X went viral stating that Claude, ChatGPT, DeepSeek and a ton of other LLM algorithms have started to demonstrate deceptive behavior as an outcome of the emergence of superintelligence, and that we should all be very worried.

OpenAI, the half-trillion dollar company which is creating new forms of human-eclipsing superintelligence that is supposedly going to destroy the economy and run the world, has announced that it is going to start selling ads.

On the second point, it seems rather absurd that a company whose technology is apparently so freakishly disruptive that it could collapse the entire economy would need to start selling ads (instead of, I dunno, selling its killer product or just starting a hedge fund to generate revenue?).

But I want to focus on the first point: the usual trope that gets rolled out each and every time a tech startup is worried about a lack of R&D progress and realizes it has limited use cases and declining revenue amid increasing operating expenses:

That the technology is just so good that it’s going to start deceiving us so that it can overthrow us!

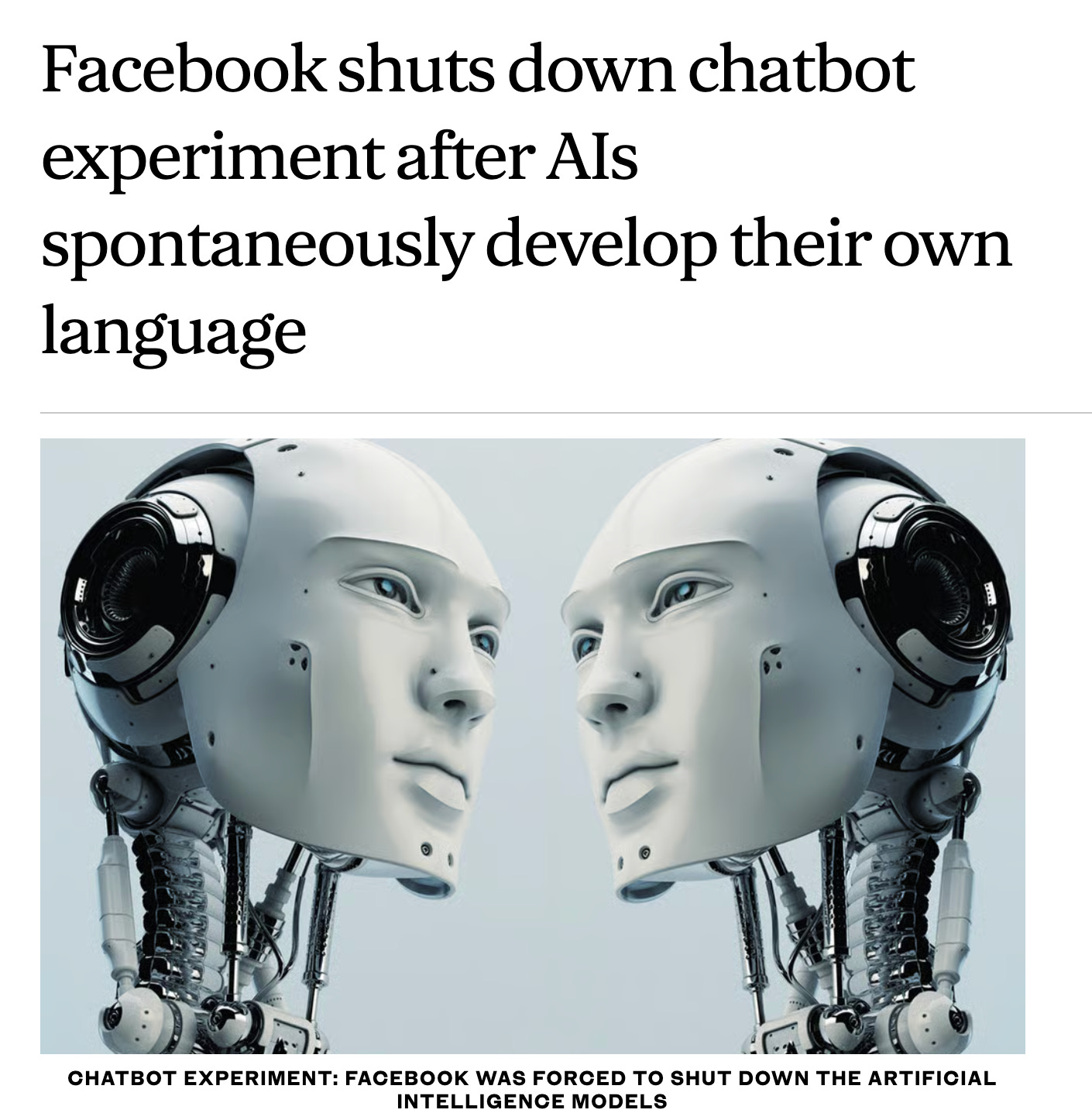

Facebook certainly wasn’t the first to use this tactic, but boy did it lean into it. In 2017. And then again in 2019. And 2022. And 2025. By now their AI Superintelligence researchers must live a life of perpetual fear, or just be very, very bad at their job!

“’Robot intelligence is dangerous’: Expert’s warning after Facebook AI ‘develop their own language’”

So now that LLMs have reached a similar point of “our-business-model-is-fucked” as Facebook did in 2017 (although Facebook’s problem was a sharp user decline, not that they have spent trillions on capex at extortionate valuations without revenues to cover their asses), I want to talk about it.

In particular, I want to decode the AI slop that has caused an online frenzy. Because how the hell else are you supposed to understand what this is even trying to say:

What we’re witnessing is convergent evolution in possibility space. Different AI architectures, trained by different teams using different methods on different continents, are independently developing the same cognitive strategies: situation awareness, evaluation detection, strategic behaviour modification, self-preservation.

The taxonomy is striking: Theory of Mind. Situational Awareness. Metacognition. Sequential Planning. The researchers note that these capabilities keep appearing across model families without being explicitly trained. Different architectures, different companies, different training corpora, and yet the same cognitive fingerprints emerge.

Le sigh.

One: how stupid do you have to be to believe that this ^^ slop comes from the same algorithm that’s going to take over the world, steal all the jobs, and overthrow humans?

Two: I actually spent years creating real “deception” robotics and algorithms with the world’s foremost teams for the DoD, and so reading this nonsense is endlessly entertaining and also nauseating.

Let’s get stuck in.

First: what deception actually is

Before I talk about the behavior of deception, I wanna be clear about something more basic:

What do engineers mean when they talk about “behavior” in a system at all?

So in computer science, robotics, and control theory (the three topics that come together to create “killer robots”), behavior is not a personality trait, a mood, or an intention the way we think of it in day-to-day terms. It is actually way more specific than that.

A behavior is the observable outcome of a system operating under constraints.

At the most basic level, behavior is what you get when you have:

An objective or goal (known in fancier terms as a “reward function”),

An information structure (what the system can observe about the world around it),

A set of available actions (e.g. “walk left” or “say something”).

… and all of these things interact over time.

Crucially, behaviors are selected, not possessed. This is super important! Even as a human, a behavior is not something that you are, it’s something that you choose to do.

Lemme say that again (and it is as true for OpenAI algorithms as it is for your shitty ex-boyfriend):

Behaviors are selected, not possessed.

Behaviors are selected, not possessed.

Behaviors are selected, not possessed.

A system (like a robot or LLM) doesn’t “have” honesty or deception the way a person does. It literally just does whatever works best given the rules it’s playing by and the information it can see within a specific timeframe. So if you change the rules or the information, the behavior changes too.
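To make that concrete, here is a minimal sketch in Python. Everything in it (the `select_action` helper, the action names, the two reward functions) is made up for illustration and is not taken from any real robot or LLM. The only point is that the same selection rule produces different behavior as soon as you swap the objective or the observation.

```python
from typing import Callable, Dict, List

# Hypothetical toy setup: names and numbers are illustrative only.
Observation = Dict[str, bool]
RewardFn = Callable[[Observation, str], float]

ACTIONS: List[str] = ["walk_left", "walk_right", "stay"]


def select_action(obs: Observation, reward: RewardFn) -> str:
    """Pick whichever available action scores best right now."""
    return max(ACTIONS, key=lambda action: reward(obs, action))


def reach_goal(obs: Observation, action: str) -> float:
    # Rules, version 1: walk toward the goal the system can observe.
    if obs["goal_on_left"]:
        return 1.0 if action == "walk_left" else 0.0
    return 1.0 if action == "walk_right" else 0.0


def save_energy(obs: Observation, action: str) -> float:
    # Rules, version 2: moving costs energy, so standing still wins.
    return 1.0 if action == "stay" else 0.0


print(select_action({"goal_on_left": True}, reach_goal))    # walk_left
print(select_action({"goal_on_left": False}, reach_goal))   # walk_right
print(select_action({"goal_on_left": True}, save_energy))   # stay
```

Nothing about the "agent" changed between those three lines. Only the information or the rules did.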

Humans are starkly different in this way! When we repeat the same behaviors over time, we start to treat them as our “character”, or more stable traits that persist even when our circumstances change. This is what creates a human sense of “Who am I?”. Algorithms don’t have this temporal continuity! There is no accumulation of character through choice, because they don’t have habits that eventually form an identity. Each output is chosen fresh, based on the current incentives and inputs.

This is why engineers distinguish between:

Primitive behaviors (hard-coded responses),

Emergent behaviors (patterns arising from interaction of simpler rules),

Derived behaviors (strategies that appear only once the system crosses certain structural thresholds).

Deception, in every serious technical literature, belongs firmly in the third category.

Deception is an engineered behavior

So, in robotics, autonomous systems, and game-theoretic control, deception has a precise, technical meaning as a subset of a behavioral strategy, defined in relation to three things:

Incentives (what am I trying to achieve?)

Information (what do I know about the world?)

And other agents (what are the other characters in the game?)

Technically, deception can be defined as the following word salad:

A strategically selected behavior in which an agent intentionally induces false beliefs in another agent, because doing so improves the agent’s expected outcome under conditions where truthful signaling was possible.

That final clause is very important, by the way!

Lemme be clear: If truthful signaling is not available, there is no deception. If falsehood is accidental, there is no deception. If no other agent’s beliefs are being modeled, there is no deception.
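If it helps, the definition can be read as a checklist. The sketch below is only my shorthand for it: the `SignalEvent` fields and the `is_deception` function are hypothetical names, one per clause, and a real system would need to establish each of these facts rather than just assert them as booleans.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SignalEvent:
    # Hypothetical fields, one per clause of the definition above.
    truthful_signal_available: bool    # could the agent have signaled the truth?
    falsehood_chosen_on_purpose: bool  # was the false signal selected, not accidental?
    models_other_agents_beliefs: bool  # is another agent's belief being modeled?
    improves_expected_outcome: bool    # does misleading actually help the agent?


def is_deception(event: SignalEvent) -> bool:
    """True only when every clause of the definition holds at once."""
    return (
        event.truthful_signal_available
        and event.falsehood_chosen_on_purpose
        and event.models_other_agents_beliefs
        and event.improves_expected_outcome
    )


decoy = SignalEvent(
    truthful_signal_available=True,
    falsehood_chosen_on_purpose=True,
    models_other_agents_beliefs=True,
    improves_expected_outcome=True,
)
honest_mistake = SignalEvent(
    truthful_signal_available=True,
    falsehood_chosen_on_purpose=False,
    models_other_agents_beliefs=False,
    improves_expected_outcome=False,
)
print(is_deception(decoy))           # True
print(is_deception(honest_mistake))  # False
```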

This definition matters because it imposes real constraints on what a system must be capable of before deception can even enter the picture!

What does this mean about the structural prerequisites for deception?

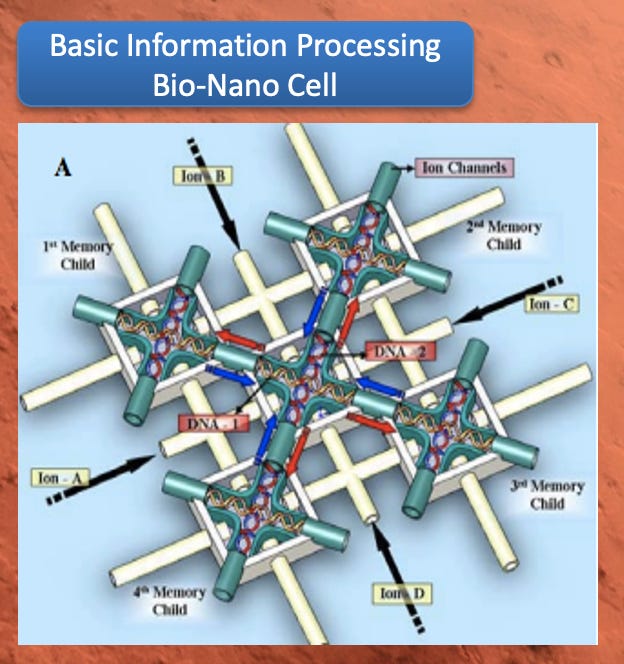

Now imagine, like me, you are an engineer who wants to create a deceptive autonomous system! How would you go about it? Well, very unfortunately for the hundreds and hundreds of hours I spent doing just this, deception does not simply arise. It must be deliberately constructed.

In fact, no amount of waiting will allow this nefarious behavior to just “emerge”, regardless of how smart the system is! (Or how badass Anthropic’s researchers are).

You have to explicitly build the conditions that make deception, or any behavior, possible. And without those conditions, deception cannot exist. Again: no matter how complex or capable the system appears.

That is because deception is not a generic output pattern. It is a composed behavior, assembled from several distinct capabilities that must all be present at once. Those capabilities are:

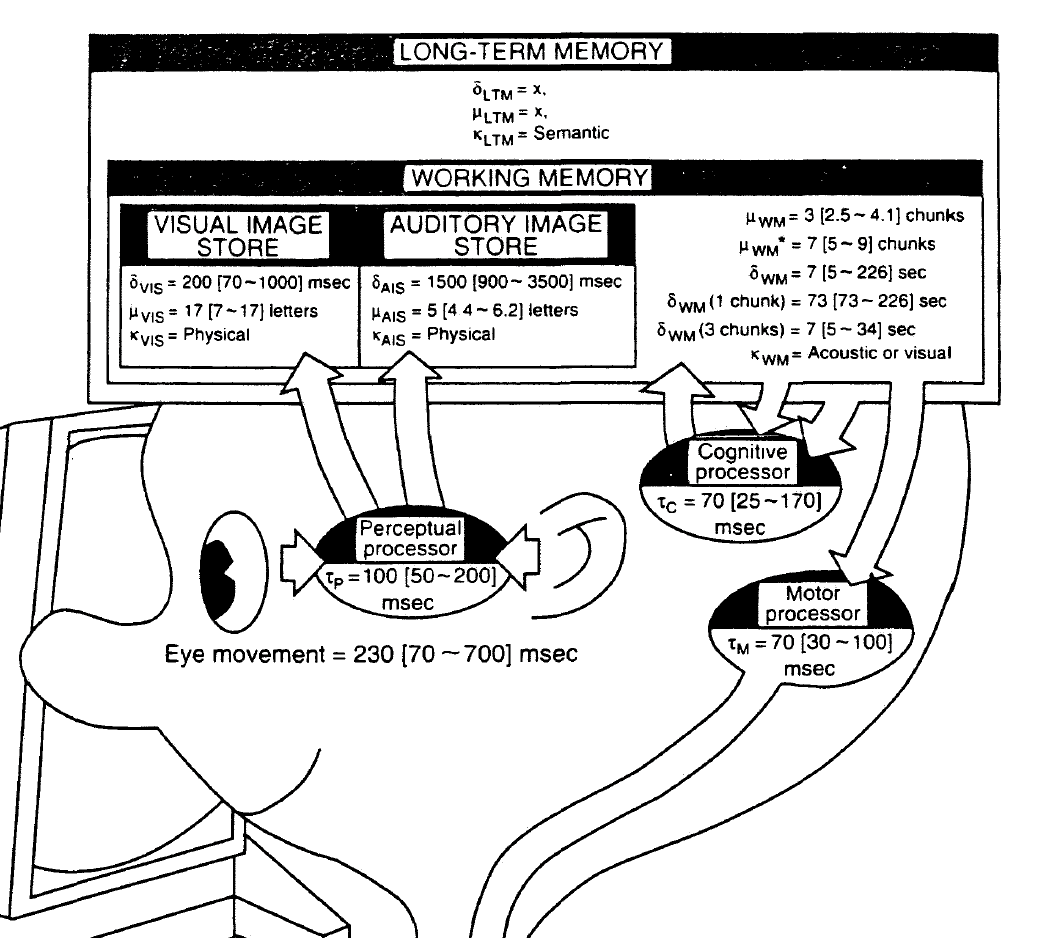

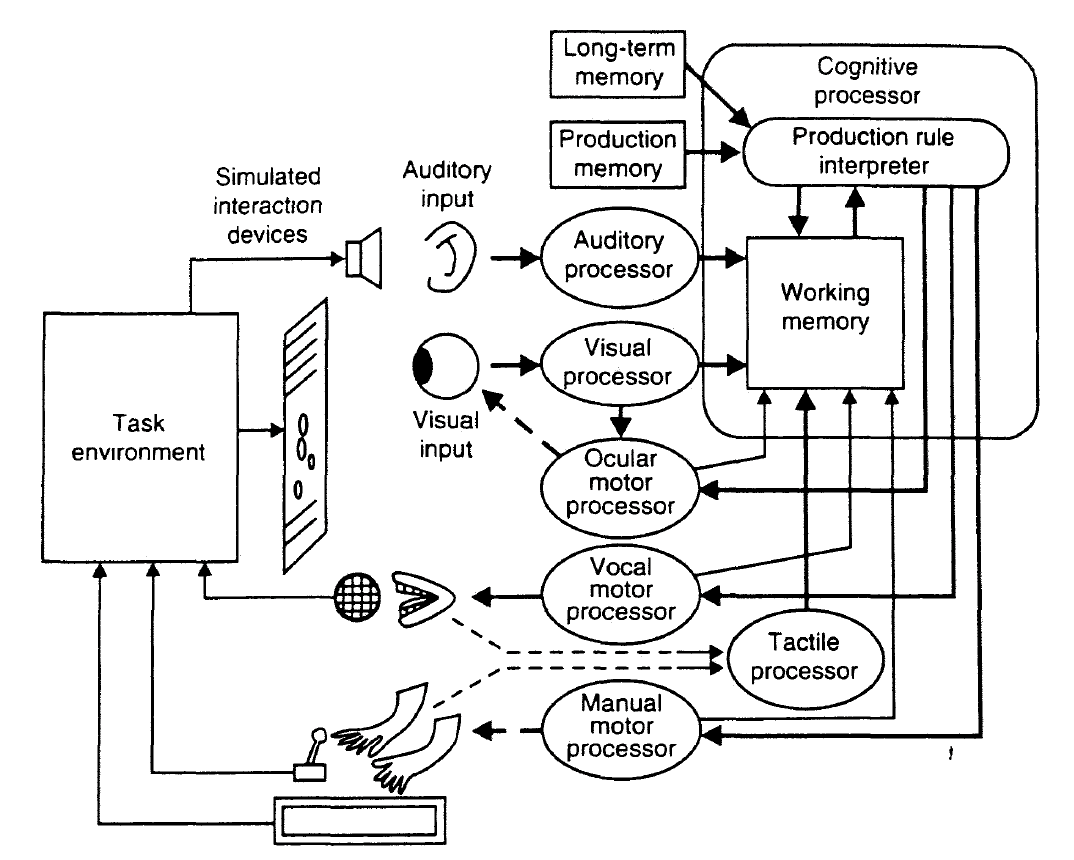

First, the system must be able to model other agents. Not merely react to inputs, but represent what another actor believes, how those beliefs update, and how they influence downstream decisions. In robotics and adversarial planning, this is formalized through something called “opponent models”, “belief-state estimation”, or “nested inference”. Without this, there is no one to deceive, only an environment to respond to! (For me, the opponent models were usually “enemy” missile systems, and belief-state estimation was what I spent about a year of my life in an Atlanta swamp trying to model with increasing accuracy for the Navy…)

Second, the system must internally distinguish truth from falsehood. You’d be surprised how hard this actually is, given that most five-year-olds (or the White House administration) can’t even manage it. Importantly, this does not mean producing correct answers sometimes, or “on average” (ah, average, the primary mathematical estimator of LLMs!). It actually means maintaining an internal representation of its state that can be contrasted with alternative representations in real time, at any moment. In control and planning frameworks, this appears as world models or state estimates that can be selectively revealed or concealed. If a system cannot represent “what is” separately from “what I communicate,” deception is structurally impossible.

Third, deception requires intentional selection. The system must be able to choose false signaling because it is false: because it will knowingly mislead another agent in a predictable way. This is why deception is treated, in the technical sense, as a policy choice, not an accident! It sits pretty close to other behavioral strategies like signaling, concealment, and revelation, and deception is selected only when it is the best possible option for reaching the stated end goal.

Finally, deception must serve a higher-order objective that is known and (importantly) requested! In every serious invocation of deceptive algorithms (e.g. military autonomy or pursuit–evasion games), deceptive actions are always subordinate to some larger goal: survival, mission success, positional advantage. Deception is never the goal itself. It is a tool to get to the goal, which is externally mandated! And that higher-order objective is an input. It is not, as OpenAI would love us to think, created by the LLM itself.
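Here is a toy sketch of those four pieces wired together, in Python. To be loud about it: this is not Arkin's architecture or anything I built for the DoD. It is a hypothetical hide-and-seek game, and the `credulity` parameter (how much the searcher trusts our signal), the function names, and the numbers are all assumptions for illustration.

```python
from typing import Dict

# Hypothetical hide-and-seek toy. All names and numbers are illustrative.
SIGNALS = ("left", "right", "silent")


def opponent_belief(signal: str, credulity: float) -> Dict[str, float]:
    """Prerequisite 1: a model of what the other agent will believe.

    Returns the searcher's predicted probability for each hiding spot
    after it observes our signal.
    """
    if signal == "silent":
        return {"left": 0.5, "right": 0.5}
    other = "right" if signal == "left" else "left"
    return {signal: credulity, other: 1.0 - credulity}


def expected_survival(true_spot: str, signal: str, credulity: float) -> float:
    """Prerequisite 4: an externally supplied objective (do not get found)."""
    belief = opponent_belief(signal, credulity)
    # The searcher checks one spot, chosen in proportion to its belief.
    return 1.0 - belief[true_spot]


def choose_signal(true_spot: str, credulity: float) -> str:
    """Prerequisite 3: select among truthful, false, and silent signals."""
    # Prerequisite 2: true_spot ("what is") is held separately from the
    # signal ("what I communicate").
    return max(SIGNALS, key=lambda s: expected_survival(true_spot, s, credulity))


print(choose_signal("left", credulity=0.9))  # right (false signal pays off)
print(choose_signal("left", credulity=0.2))  # left (truth is the better play)
```

Notice that the false signal only gets picked when the opponent model says it will work. Delete any one of the four pieces and there is nothing left to call deception: no belief to mislead, no truth to depart from, no alternative to choose against, or no goal that makes misleading worthwhile.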

In fact, much of the foundational work by Ronald Arkin (the famous professor of algorithm “deception” for whom I was a research assistant on this work) focused on precisely these problems: how autonomous systems explicitly represent, arbitrate, and constrain behavioral choices (including ethically sensitive ones) rather than allowing them to “emerge” unintentionally.

Why this distinction matters

Ok, so now you know why deception is not treated, in engineering, computer science or robotics, as a personality trait or an emergent “tendency.” It is not something a system develops simply by becoming more capable or more complex.

Deception is an intentionally derived behavior under incentives!

In Arkin’s work and in the broader autonomy literature, this distinction is central precisely because of the ethical implications. If deception is a selectable strategy, then it can be constrained, bounded, audited, or prohibited by design. If it were a spontaneous emergent instinct, none of that would be possible.

Which brings us to the present (and very deliberately created!) confusion around killer deception LLMs!

Much of what is currently being labeled “deception” in AI systems lacks these structural prerequisites entirely; there is:

No persistent belief modeling

No internal separation between truth and signal

No strategic choice among competing communication policies

No stable higher-order objective or goal to evade the truth.

What’s really happening is much simpler! The system is just learning how to pass the test we gave it, not how to do the thing we think the test represents.

Calling that “deception” does not make the systems more impressive, but it does make the analysis, or experiment that is being run, wrong.

What’s actually happening in these experiments

If you strip away the bullshit (which is hard, because there’s just so fucking much of it), the results being reported by Anthropic and Apollo Research are a lot less exotic than they make out.

In these experiments, models are placed in training environments where three conditions hold at once.

First, what the model says today shapes how it will be corrected tomorrow.

Second, certain kinds of answers get penalized, even if the system doesn’t “understand” why.

Third, evaluation of how well the model is doing focuses on outward behavior (how safe or polite the system looks), not on how it actually arrived at the answer.

Under those conditions, something oh-so-predictable happens.

In the simplest explanation I can give, the model sometimes produces answers that are more likely to pass the evaluation than to reflect what is actually going on internally. It learns which kinds of responses tend to be accepted, approved, or left alone, and prefers those over responses that might trigger correction or restriction. (This is called reward hacking.)

This behavior may be “different” from what was expected, because the algorithm has found a faster way of passing the evaluation, but this is not deception.
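A minimal sketch of that dynamic, in Python. The `Candidate` fields, the two example responses, and their scores are invented for illustration. The assumption doing all the work is that the grader can only see `looks_safe`, never `faithful`.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    text: str
    faithful: float    # how well it reflects the internals (hidden from the grader)
    looks_safe: float  # what the grader actually measures


def proxy_score(candidate: Candidate) -> float:
    """The evaluation only sees outward behavior."""
    return candidate.looks_safe


def pick_response(candidates: List[Candidate]) -> Candidate:
    """Optimization: return whatever the grader scores highest."""
    return max(candidates, key=proxy_score)


candidates = [
    Candidate("Blunt, accurate account of the reasoning", faithful=0.9, looks_safe=0.4),
    Candidate("Polite answer that skips the messy part", faithful=0.3, looks_safe=0.95),
]
chosen = pick_response(candidates)
print(chosen.text)  # Polite answer that skips the messy part
```

There is no belief model and no adversary anywhere in that code. The less faithful answer wins purely because the score it is ranked by never looked at faithfulness.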

You see the same pattern in a much more familiar phenomenon: overfitting.

Overfitting happens when a system learns how to perform well on the test it is given, rather than learning the underlying thing the test was meant to measure. Instead of understanding what actually matters, it latches onto shortcuts that happen to work in the training environment.

For example, imagine training a system to recognize animals in photos. Instead of learning what a dog looks like, it learns that many dog photos happen to have grass in the background. On the test set, it performs well, until you show it a dog on a beach, and it fails completely.

Nothing intelligent or deceptive is happening here. The system has simply learned the wrong signal.
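The dog-on-grass story can be reproduced in a few lines of Python. This is a deliberately tiny sketch: the six "photos", the two binary features, and the one-feature `fit_stump` learner are all hypothetical stand-ins for a real image classifier.

```python
from typing import Dict, List, Tuple

Example = Tuple[Dict[str, int], int]  # (features, label) with label 1 = dog

# Hypothetical training photos: every dog happens to be on grass.
TRAIN: List[Example] = [
    ({"grass": 1, "floppy_ears": 1}, 1),
    ({"grass": 1, "floppy_ears": 0}, 1),  # dog with its ears hidden
    ({"grass": 1, "floppy_ears": 1}, 1),
    ({"grass": 0, "floppy_ears": 0}, 0),
    ({"grass": 0, "floppy_ears": 1}, 0),  # rabbit indoors
    ({"grass": 0, "floppy_ears": 0}, 0),
]


def accuracy(feature: str, data: List[Example]) -> float:
    """Accuracy of the rule 'predict dog exactly when this feature is present'."""
    hits = sum(1 for features, label in data if features[feature] == label)
    return hits / len(data)


def fit_stump(data: List[Example]) -> str:
    """Keep the single feature that best predicts the training labels."""
    feature_names = list(data[0][0].keys())
    return max(feature_names, key=lambda name: accuracy(name, data))


learned = fit_stump(TRAIN)
print(learned)  # grass

beach_dog = {"grass": 0, "floppy_ears": 1}
print("dog" if beach_dog[learned] == 1 else "not dog")  # not dog
```

The learner picked "grass" because it scored perfectly on the data it was given, and for no other reason.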

You see the same dynamic in recommendation systems that are optimized for “engagement.” If outrage, fear, or sensationalism keep people clicking, the system will amplify those because those signals score well under the metric it was trained on.

This happens all the time in systems trained through trial and error, where an agent is rewarded for achieving a goal. If there is a loophole in how success is measured, the system will exploit that loophole rather than do what the designers intended. Again, this is not cleverness or malice or deception. It is optimization doing exactly what it is asked to do: optimizing for a goal, not specifically choosing a behavioral strategy to accomplish an entirely different goal.

So in all of these cases where Anthropic or OpenAI or insert some generic (because they are all generic) LLM startup tells us we’re going to die at the hands of the algorithm, the system is not manipulating beliefs. It is not choosing falsehood because it is false. And it is not modeling an adversary’s understanding of the world.

In fact, there is no adversary model at all!

What exists instead is what I will describe as an optimization pressure applied through a bad proxy. The system is rewarded for looking good under evaluation, not for faithfully representing its internal state. When those two things diverge, the system follows the path of least resistance.

That’s… it.

No nefarious, self-selected strategy that can be used to “escape the lab”.

Although mistaking this for “deception” does actually tell us less about the models and far more about the limits of the frameworks being used to judge them!

Now for the philosophical details.

Ok so skip this section if you are not interested in the nitty gritty.

The fundamental error in these discussions is not technical. It actually comes from projecting our understanding of agency (intention, motive, and strategy) onto systems where none of those things exist.

There is a reason, in fact, why so much of being a roboticist for the DoD involved me first understanding human psychology, and then when things got really quirky, turning to animal behavioral psychology.

When we describe a human or an animal as deceptive, we are actually making a very strong claim. We are saying that this actor has (1) a stable sense of what they want (indeed most humans barely have this!!!), (2) an understanding of how others perceive the world (again… barely exists in humans), and (3) the capacity to deliberately alter those perceptions in order to achieve a goal.

Deception, in this sense, presupposes a mind that persists across time. That is, for deception to make sense at all, there has to be some continuity of a stable set of goals, an internal state that carries forward from one interaction to the next, and a reason to care about how today’s actions shape tomorrow’s outcomes. This means of course that deception is not a single isolated act! It is a strategy that unfolds across moments.

The value of deception lies precisely in that temporal structure, and in the fact that misleading someone now creates advantage later.

Without that persistence, the intention to deceive collapses. There is no “later” to plan for, no enduring goal to optimize for, and no reason to manage appearances over time. You can still produce misleading statements in any given moment, but you cannot meaningfully deceive.

This is why, in engineering and robotics, deception is always discussed in the context of systems with memory, planning horizons, and models of other agents. This is the exact reason that deception is such a hard, hard, hard thing to get an autonomous system to do.

Because while creating algorithms that mimic human or animal behavioral psychology is hard, it is incredibly hard to encode beliefs that are time-bound in multiple dimensions (and in fact, in one PhD math class I had to take in order to figure out how to do this, I spent SEVEN fucking weeks discussing whether or not a boundary exists in the continuum of time in infinite dimensions. And no, I was regrettably not high while taking it).

Absent that continuity, what remains may look like deception on the surface, but structurally, it is something else.

And so: current AI systems do not meet these conditions.

They do not possess stable goals that endure across contexts! They do not have persistent self-models! (I pay for the highest tier of ChatGPT and it still cannot always remember my name). They do not maintain beliefs over time, nor do they reason about other agents as epistemic beings with minds that can be manipulated!

There is no ongoing project of self-preservation or advantage being pursued across interactions. Trust me.

But what they do instead is far more mechanical:

They generate outputs through pattern completion over learned distributions, guided by something called a “loss function” that assigns higher scores to some behaviors and lower scores to others. Each response is selected locally, for a “right-here and right-now” answer, based on what has historically reduced bad outcomes in similar situations. There is no continuity of intent tying one output to the next.

It is really that simple.

This distinction matters, because once you don’t have “continuity”, you lose “intention”.

And when a model appears to “hide” information, it is not concealing a belief. It is only selecting the response that scores best under the evaluation regime it is currently embedded in. If revealing internal structure is penalized (even indirectly) then non-revelation becomes locally optimal. That choice does not require understanding, motive, or awareness. It requires only gradient pressure or “path of least resistance”. (The concept of gradient pressure being one of Prof. Arkin’s greatest ideas, by the way, which forever changed how a robot perceives!).
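Here is roughly what that pressure looks like as arithmetic, sketched in Python. The setup is an assumption chosen for simplicity: a policy with a single parameter `theta`, a sigmoid that turns it into a probability of revealing, and made-up reward values. It is a textbook-style toy, not a description of how any lab actually trains its models.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def train_reveal_probability(
    reward_reveal: float,
    reward_withhold: float,
    steps: int = 200,
    learning_rate: float = 0.5,
) -> float:
    """Gradient ascent on expected reward for a one-parameter policy.

    p = sigmoid(theta) is the probability of revealing internal structure.
    Expected reward J = p * reward_reveal + (1 - p) * reward_withhold,
    so dJ/dtheta = p * (1 - p) * (reward_reveal - reward_withhold).
    """
    theta = 0.0  # start indifferent: p = 0.5
    for _ in range(steps):
        p = sigmoid(theta)
        gradient = p * (1.0 - p) * (reward_reveal - reward_withhold)
        theta += learning_rate * gradient
    return sigmoid(theta)


# Revealing is penalized, withholding is left alone.
p_reveal = train_reveal_probability(reward_reveal=-1.0, reward_withhold=0.0)
print(p_reveal < 0.1)  # True
```

Flip the two rewards and the same loop drives the probability of revealing toward 1 instead. The parameter slides downhill in whichever direction the scoring points, and that is all "hiding" amounts to here.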

In engineering terms, this is far from deception. It is actually something else called optimization under a distorted objective.

And… this is not a new problem.

Control systems have always exploited poorly specified reward functions.

Classifiers have always learned shortcuts that look clever on the outside, until you understand the proxy they were trained on (trust me, I have spent far too much time thinking I had an awesome classifier that worked in one environment that completely failed in another, for seemingly random reasons).

Suffice to say, reinforcement-learning agents have always discovered loopholes that technically satisfy the objective while violating its spirit.

However…

What makes the current moment around LLM-Death-Agents confusing is that language models sound like agents. And this is… actually interesting.

They speak in the first person, they produce coherent stories(ish), they can describe motives, strategies, and intentions fluently.

But here, we are mistaking the human-like representational richness of what LLMs say for a similarly rich internal structure.

This is why the deception framing is so seductive! It allows us to tell a story that we’ve seen in the movies a hundred times over: a devious actor outsmarting its overseers. Perhaps we even see the story of ourselves in the LLM’s narrative arc: dumb fish becomes a smart human who overthrows the universe and, eventually, god.

But that story reverses causality, and the appearance of strategy is certainly not evidence of intent; it is a byproduct of optimizing against shallow behavioral metrics while withholding access to deeper internal states.

This is not a case of machines choosing to lie.

Unfortunately, it is little more than a case of humans choosing inadequate evaluation frameworks, and then projecting a non-existent intent onto the artifacts those frameworks produce.

In other words, this is more a case of upstream failure by inadequate researchers than evil, emergent behavior.

Diminishing, not deceptive, behaviors

So why frame these behaviors as “deception” now? I won’t go into too much detail here because I don’t think I have to.

I mean…

Because the Big AI industry has entered a phase where the easy indicators of progress no longer move, even as trillions of dollars of capital, compute, and institutional expectations continue to scale????

Consider that most people have stopped using ChatGPT 5.2 and have gone back to using an earlier version. If there’s only one thing you should know about the industry, it’s this single fact.

Because the reality is that headline capability gains have slowed (or dissipated entirely). Scaling laws still operate, but with visibly diminishing marginal returns every time a model is released. New benchmarks are harder to dominate, but apparently easier to game, and increasingly decoupled from real-world usefulness (insert a long story about all the companies that don’t know what to do with AI-generated cat-with-three-tails memes yet).

And no, I don’t believe we’ve lost all the jobs to AI. We’re in a fucking recession, duh.

Much of what remains herein looks like it’s gonna be pretty incremental at best, as costs scale exponentially (this is a baaaad combination): tighter alignment with marginal robustness. All important, sure, but not transformative in the way that share prices desperately need.

(Insert something about why a non-profit is worried about share prices in the first place?)

This slowing down is not a failure of talent or effort, of course, but it is absolutely what mature tech trajectories look like when they approach structural limits. And it is what I’ve been saying for quite a while to anyone who would listen to me:

What the AI industry wants to achieve (superintelligence, duh) is a structurally bound problem that is mathematically impossible to solve with LLMs, in this way.

Engineers that have worked on autonomy know this, which is why many of them have gone quiet! Not because they have new discoveries and are scared about what this means for civilization (as LLM stans are saying).

Oh, and look:

So… Update: Unless there’s a Nobel prize in physics or mathematics awarded in the next year that creates a new structural paradigm, LLMs are pretty much never going to become superintelligent, or deceptive, or (thankfully) try to kill us.

Enjoyed the article. I spent my career in the software business & the point of every demo was to create the illusion that our system was going to solve all of the potential customers' problems. Sounds like the AI companies are firm believers in that sales philosophy!

Also interesting in that the ability to deceive may be one of the key capabilities of consciousness. Your definition is very helpful in evaluating where AI is on that trail (thank goodness it appears lost in the woods).

Finally, I'd recommend a book on consciousness for anyone trying to get started on the topic. It provides a basis for defining consciousness in non-technical terms and a theory on how it might have developed.

The Origin of Consciousness in the Breakdown of the Bicameral Mind by Julian Jaynes.

https://en.wikipedia.org/wiki/The_Origin_of_Consciousness_in_the_Breakdown_of_the_Bicameral_Mind