Economics: Elegant, Precise… and Useless?

How economics got stuck on Newton’s chalkboard while the world moved on.

Hi, Sinéad again. Sunday night and I can’t stop throwing out economics/markets hit pieces. So here’s one more!

I don’t often talk about the genre of economics that I’m most passionate about: econometrics.

So in this dispatch, I’m going to let you in on, and attempt to explain, a suuuper fierce-turned-nasty (but also hilarious) fight happening on Twitter this weekend between the biggest names in economics out there.

[Come for the gossip, stay for the primer on how economics advances]

Image: FT

But first, I should say: I had worked in engineering academia (where people are generally lovely) for several years before I moved into economics academia (where people can be assholes).

And I remember my first seminar, in which I watched a famous and brilliant economist outline his research. He didn’t get past the second slide before the mud-slinging (actual shouting!) started, and he did not finish. He never got to the punchline of his paper.

It was the first time I realized just how cruel, and mean, and catty the field of economics could be.

So that is the landscape in which we find ourselves this weekend!

Here we go:

A couple of days ago, the Financial Times published what looked, at first glance, like a harmless mid-morning essay: an economics columnist reminiscing about the trauma of graduate school and asking whether the field had fallen in love with the wrong kind of mathematics.

On the surface, it was the sort of piece you’d expect to skim over and promptly forget. Instead, it touched off a minor (major?) academic riot. Econometricians rushed to defend their integrals, behavioural economists called for a ceasefire on utility functions, and hedge fund quants started screaming into the void at anybody and everybody.

As of this evening, the profession still resembles a quasi-violent group chat.

Why the uproar?

Because the column said out loud what many insiders whisper: economics still thinks like Newton. The discipline clings to a 17th-century toolkit (calculus, optimisation, equilibrium) as though the global economy were a tidy system of levers and pulleys rather than a volatile, networked, crisis-prone mess.

And here’s the thing: the column was half right.

Sorry… I had to.

Yes, economics is stuck on Newtonian maths. But the deeper problem isn’t just outdated equations.

The bigger story that Tim Harford (for whom I actually feel quite sorry right now!) failed to grasp? It’s the incentives, the institutions, and the profession’s refusal to admit that the world isn’t smooth or solvable.

That’s the story that’s really worth telling. Because when the shit models that the industry uses fail (ummm, 2008?), the fallout doesn’t just stay in the group chat. It impacts all of us.

What “Newtonian Maths” Means in Economics

So what exactly is this “Newtonian maths” everyone keeps talking about?

At its core, it’s the toolkit of calculus: maximisation and optimisation, subject to constraints. The same math Newton soooo long ago used to describe the trajectory of a cannonball has been repurposed to describe the trajectory of a consumer’s grocery budget.

Easy math, applied to insanely complex phenomena.
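
To make that concrete, here is a minimal sketch of the textbook consumer problem: maximise a utility function subject to a budget constraint. The Cobb-Douglas utility, the prices, and the function names are all illustrative assumptions (none of them come from the FT column). The point is just that the calculus hands you a closed-form answer, which a brute-force search over budget splits then confirms.

```python
def utility(x, y, alpha):
    """Cobb-Douglas utility (an assumed functional form, chosen for illustration)."""
    return (x ** alpha) * (y ** (1 - alpha))


def cobb_douglas_demand(alpha, income, price_x, price_y):
    """Closed-form optimum of: max x^alpha * y^(1-alpha) s.t. px*x + py*y = income.

    The first-order conditions imply the consumer spends a share `alpha`
    of income on good x and the rest on good y.
    """
    x = alpha * income / price_x
    y = (1 - alpha) * income / price_y
    return x, y


def grid_search_demand(alpha, income, price_x, price_y, steps=10_000):
    """Brute-force check: try many budget splits and keep the best one."""
    best_bundle = (0.0, 0.0)
    best_utility = -1.0
    for i in range(steps + 1):
        share = i / steps                     # fraction of income spent on x
        x = share * income / price_x
        y = (1 - share) * income / price_y
        u = utility(x, y, alpha)
        if u > best_utility:
            best_utility, best_bundle = u, (x, y)
    return best_bundle


# Hypothetical numbers: a 100-unit budget, two goods priced at 2 and 5.
closed_form = cobb_douglas_demand(alpha=0.3, income=100.0, price_x=2.0, price_y=5.0)
brute_force = grid_search_demand(alpha=0.3, income=100.0, price_x=2.0, price_y=5.0)
print("closed form:", closed_form)
print("grid search:", brute_force)
```

Both routes land on roughly the same bundle. That is the appeal: one line of algebra replaces ten thousand trial splits, as long as the utility function is smooth and well-behaved.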

This framework, though, has obvious appeal. It’s elegant. It’s solvable. It makes lovely graphs for PowerPoint slides. And crucially, it’s publishable. You can prove theorems with it, write clean equations, and churn out papers that look like physics.

But there was another, less glamorous reason Newtonian maths took over: compute power.

Until very recently, computers (and before them, humans with chalkboards) could only handle models that were mathematically “tractable.” That meant smooth curves, closed-form solutions, and systems that converged neatly to equilibrium. In other words, the math had to be easy enough for machines to crunch.

The consequence? Economists leaned into simplifications they knew were unrealistic (representative agents, rational expectations, frictionless markets) because at least those models could be solved, simulated, and dressed up to look scientific. The sheer convenience of solvability gave these models prestige, even as their assumptions made no sense outside the seminar room.

The problem is that the real economy isn’t smooth (duh).

It’s jagged, networked, unstable. Shocks cascade and feedback loops magnify tiny inputs into full-blown crises. People don’t behave like cannonballs midair; they panic, herd, cheat, or click on dark-pattern ads.

Optimising a “utility function” doesn’t tell you much about why a housing bubble metastasises into a global crisis, or why the same bubble pops harmlessly in one sector but topples banks in another.

In other words: Newton’s maths was useful because it was solvable, not because it was true.

And when solvability becomes the criterion for success, you end up with a discipline that strives for elegance, but not explanation.

The Rise (and Narrowing!) of Econometrics

If so-called Newtonian calculus gave economics its aura of science, econometrics was supposed to give it its empirical backbone. (Econometrics is the branch of economics concerned with the use of math and statistical methods in describing economic systems.)

The idea was simple enough: stop spinning elegant theories in the air, and instead measure the real world.

Easy, right? Well…

In its early days, econometrics aimed big.

Myself and my econometrics best friend, Prof. Guillaume Pouliot, discussing stochastic optimization at University of Chicago, c. 2015, as we did several times a week in the presence of too much wine and beer.

Postwar economists like Jan Tinbergen (who shared the first Nobel in economics, in 1969) and Lawrence Klein (who later won the Nobel in 1980) tried to estimate entire systems: supply and demand, consumption and investment, expectations and prices. The dream was to build models that captured the whole economy, equations interlocked like gears in a machine. You could feed in data, run the model, and out would pop a forecast.

But… It didn’t quite work.

The models were fragile, the assumptions shaky, and the forecasts often embarrassingly shit. By the 1980s, Robert Lucas and others were hammering them for failing to account for how people change behaviour once they know what policy is coming.

So the field pivoted. Beginning in the 1990s and accelerating through the 2000s, economists launched what they proudly call the “credibility revolution.” The goal wasn’t to model everything, but to pin down something (literally anything) with real causal credibility.

Enter the world of identification strategies, natural experiments, and randomised controlled trials (RCTs).

Want to know the impact of class size on learning outcomes? Compare schools where a random policy capped classes at 25 to those with 30. Want to measure the returns to education? Use the distance to the nearest college as an “instrument.”

The new gold standard wasn’t sweeping system models; it was clean causal inference on narrow questions that are so small, they are seemingly irrelevant to you or me.

And it… worked. At least in terms of professional status.

Top journals today are packed with papers that exploit some quirky policy change, natural experiment, or field trial to produce a razor-sharp estimate.

The rise of randomised controlled trials (RCTs) in particular turbocharged this trend. An RCT is the same method used in medicine to test a new drug: randomly divide people into a treatment group and a control group, then compare outcomes. A/B testing at its finest. Economists imported this approach into villages, schools, and welfare offices, randomly assigning who gets microloans, who receives mosquito nets, or which classrooms get more teachers. The results were publishable, tractable, and fundable.

A perfect recipe for career advancement!
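
For the curious, the treatment-versus-control logic of an RCT fits in a few lines. This is a toy simulation, not any real study: the outcome scale, the sample size, and the "true effect" of 2.0 are made-up assumptions, and `simulate_rct` is a hypothetical helper name. It shows why random assignment lets a simple difference in group means recover the effect.

```python
import random
import statistics


def simulate_rct(n_people=10_000, true_effect=2.0, seed=0):
    """Toy RCT: a coin flip decides who is treated, then group means are compared.

    All numbers here are illustrative assumptions, not data from a real trial.
    """
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n_people):
        baseline = rng.gauss(50.0, 10.0)      # outcome without the intervention
        if rng.random() < 0.5:                # random assignment to treatment
            treated.append(baseline + true_effect)
        else:
            control.append(baseline)
    # Because assignment is random, the two groups have similar baselines on
    # average, so the difference in means estimates the treatment effect.
    estimate = statistics.mean(treated) - statistics.mean(control)
    return estimate, len(treated), len(control)


estimate, n_treated, n_control = simulate_rct()
print(f"treated: {n_treated}, control: {n_control}, estimated effect: {estimate:.2f}")
```

The estimate comes out close to the effect baked into the simulation, with some sampling noise. Clean, credible, and entirely silent on anything outside the one intervention being tested.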

By the 2010s, this approach had conquered the discipline. Development economics, once a backwater of sweeping (and often vague) theories, became the jewel in the crown of “rigorous” economics. Esther Duflo (MIT), Abhijit Banerjee (MIT), and Michael Kremer (Harvard) won the 2019 Nobel Prize for their pioneering use of RCTs in development (poverty), cementing the idea that the field’s future lay in small, clean experiments rather than big, messy systems.

Banerjee, Duflo and Kremer, 2019

The irony? This shift gave economics more prestige than ever, and proof that it could do science “properly.”

But… It also meant the discipline became very precise about very small things. We can say with confidence how much test scores rise if you give kids free glasses, or whether offering hand sanitiser at a factory entrance reduces sick days. Useful, yes. But these methods tell us almost nothing about shit you or I really care about, like why financial crises cascade, or why bubbles sometimes spread and sometimes fizzle.

In other words, econometrics solved the “rigour” problem by sidestepping the “relevance” problem.

Instead of making the big models better, it made the big questions smaller, and left the systemic blind spots wide open.

Why the Field Hasn’t Been Disrupted

If everyone agrees Newtonian calculus is outdated, and econometrics too narrow, then why hasn’t economics blown itself up and rebuilt from scratch?

The answer is depressingly simple (and one that economists certainly understand): incentives! Namely:

Institutional lock-in: Top journals love elegant theorems and clean identification strategies. Complexity models, agent-based simulations, or messy system-wide analyses? Too hard to referee, too uncertain, too unfashionable. So they get sidelined and mostly go unpublished.

Career incentives: Graduate students don’t need to solve the financial crisis; they need three publishable papers about a footnote on a footnote on a footnote. That means reaching for what journals want, not what reality needs.

Policy comfort: Politicians don’t want fancy “stochastic agent-based simulations” that say “it depends.” They want neat equations they can print on a Treasury memo, preferably with Greek letters that look scientific, that say YES or NO.

Cultural prestige: Within the discipline, proving a theorem is still treated as higher status than describing the messy truth. Math as aesthetics, not as explanation.

This brings us back to the oldest running fight in the field: is economics a science or not?

I may have to go into hiding after writing this, but…

Economists desperately want the prestige of physics: the aura of natural laws with the authority of prediction. That’s why they lean so hard on Newtonian calculus and associated models (like DSGE, for those in the know). These equations give the illusion of precision: an economy as orderly as planetary orbits.

But, and importantly: unlike physics, economics can’t run controlled experiments on parallel universes.

It studies what are called “reflexive systems”: systems where people change their behaviour in response to the model itself. Tell everyone the economy is overheating, and they adjust. Predict a crash, and you might cause one. The map changes the territory.

This is waaaay more complicated.

The result is a big, fucking mess.

What economics needs: high-dimensional optimization models

Economics now finds itself as a field which is too stylised to be descriptive, too fragile to be predictive, too mathematical to be dismissed as “just social science,” but not mathematical enough to yield durable laws.

And here’s the kicker: the profession knows all this!

Yet because of journals, career pipelines, and the seeming prestige of theorem-proving, the Newtonian + econometrics combo is self-reinforcing.

Even when it fails catastrophically (cough 2008) the solution is usually “add more Greek letters” rather than “change the paradigm.”

Also, and finally (but pretending I didn’t say this), most economists can… how should I put this?

They cannot actually do the mathematics required to ask and answer the real questions of the economy appropriately.

Think I’m lying? See the very first line of the controversial FT article itself, where the author actually admits this:

The first term of my master’s degree in economics was an alarming experience. The econometrics was bewildering. The macroeconomics was even more mysterious. Everything was drenched in incomprehensible mathematics — or, to be more honest, maths that I could not comprehend.

What the FT Missed: The Alternative Frontiers

So! Where does this leave us? Well, let me elaborate on a crucial point of the ongoing war between economists right now. What the article failed to describe is that the problem isn’t math itself. It’s the wrong math.

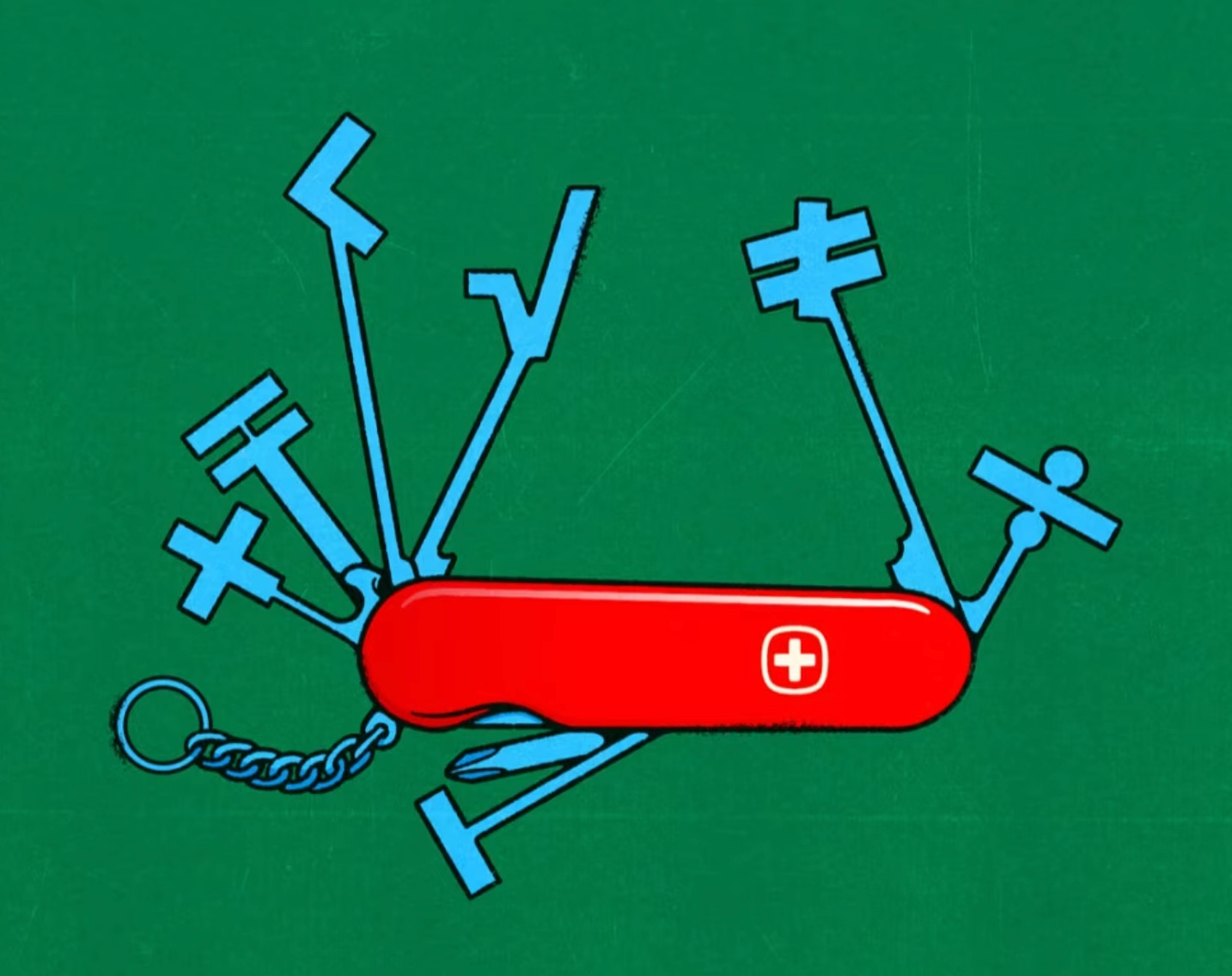

Economics doesn’t need to ditch equations and go fully vibes-based. It needs to broaden its toolkit beyond Newton’s chalkboard.

And in this new, futuristic toolkit, four frontiers stand out, all already in use elsewhere and all better suited to the messy reality of modern economies. Three of them are the exact areas that I came from!

Complexity & networks

Financial crises don’t look like equilibrium failures; they look like contagions. One bank’s loss spills into another, a supply chain failure in one region paralyzes production in another. This is network math, borrowed from physics and ecology, where shocks spread through interdependent nodes. Complexity theory asks not “what’s the optimal outcome?” but “what patterns emerge when everything is tangled?”

Agent-based models

This is actually what I specialized in for many years as an applied statistician moonlighting as an engineer! The “representative consumer” is a useful classroom toy, but in real life people are not average, and they don’t act in lockstep. Agent-based modelling simulates millions of heterogeneous actors (households, firms, banks) interacting under different rules. Sometimes nothing much happens; sometimes a tiny nudge creates cascading failures. It’s messy, but it looks a lot more like the real world than a utility function on a blackboard.

Big data + machine learning

Again, I did a ton of interesting work here, in multidimensional optimization. Traditional economics often sneers at prediction, preferring “explanation.” But in a volatile system, prediction is explanation. Machine learning models, which chew through non-linear and high-dimensional data, can capture patterns standard regressions miss. They won’t hand you a tidy theorem, but they can flag fragilities in financial markets, or forecast which supply chains are about to crack. Specifically, my work here created machine learning models for hedge funds.

Interdisciplinary borrowing

Economists don’t need to reinvent the wheel — they just need to steal shamelessly. Epidemic models capture contagion far better than comparative statics. Ecological models of resilience explain why some systems bend while others collapse. Engineering teaches redundancy and fault tolerance, concepts absent from DSGE models but vital to actual economic stability. My personal experience here was creating ecological models of squirrels hiding acorns (!) to inform robotics behaviors.
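
Here is a rough sketch of what "contagion" means in network terms. Everything in it is a toy assumption: four hypothetical banks, made-up exposures and capital buffers, and a crude rule that a bank fails once its losses exceed its capital. It is not a model anyone actually uses in production, just the skeleton of the idea.

```python
def simulate_default_cascade(exposures, capital, initially_failed):
    """Propagate defaults through a lending network.

    exposures[lender][borrower] is the amount the lender loses if the
    borrower defaults. A bank fails once its cumulative losses exceed its
    capital buffer. (Toy rule, assumed for illustration.)
    """
    failed = set(initially_failed)
    losses = {bank: 0.0 for bank in capital}
    frontier = list(initially_failed)
    while frontier:
        # Book the losses caused by this round's defaulters.
        for defaulter in frontier:
            for lender, book in exposures.items():
                if lender not in failed:
                    losses[lender] += book.get(defaulter, 0.0)
        # Any surviving bank whose losses now exceed its buffer fails next.
        newly_failed = []
        for bank in capital:
            if bank not in failed and losses[bank] > capital[bank]:
                failed.add(bank)
                newly_failed.append(bank)
        frontier = newly_failed
    return failed


# Hypothetical chain: B lent to A, C lent to B, D lent to C.
exposures = {"B": {"A": 8.0}, "C": {"B": 6.0}, "D": {"C": 4.0}}

thin_buffers = {"A": 10.0, "B": 5.0, "C": 5.0, "D": 20.0}
thick_buffers = {"A": 10.0, "B": 10.0, "C": 5.0, "D": 20.0}

print(sorted(simulate_default_cascade(exposures, thin_buffers, ["A"])))
print(sorted(simulate_default_cascade(exposures, thick_buffers, ["A"])))
```

Same shock, same network, different outcome. With thin buffers the failure of A takes B and C down with it; give B a slightly thicker cushion and the cascade stops at A. No equilibrium condition tells you that, but the network structure does.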

So, the FT column was right to say economics needs more maths, not less.

But the real story isn’t that the field is too “mathematical.” It’s that it’s stuck in the wrong century’s mathematics, clinging to calculus when the real action is in networks, simulations, and machine learning.
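
And to show what "simulation" buys you that calculus doesn't, here is about the smallest agent-based model I can think of: a Granovetter-style threshold model of herding. To be clear, this is a stand-in I picked for illustration, with a hypothetical population of 100 agents, not one of the large-scale models mentioned above. Each agent joins in (sells, panics, withdraws) once enough others already have.

```python
def run_threshold_cascade(thresholds):
    """Return how many agents end up active.

    Each agent has its own threshold: it becomes active once the number of
    already-active agents is at least that threshold. The loop repeats until
    nobody else wants to join.
    """
    active = 0
    while True:
        now_active = sum(1 for t in thresholds if t <= active)
        if now_active == active:      # nothing changed: the cascade has settled
            return active
        active = now_active


# 100 heterogeneous agents with thresholds 0, 1, 2, ..., 99.
population = list(range(100))

# The "tiny nudge": one single agent is slightly more cautious (1 becomes 2).
nudged = list(population)
nudged[1] = 2

print("original population:", run_threshold_cascade(population))
print("after tiny nudge:   ", run_threshold_cascade(nudged))
```

In the first population every agent tips the next one and all 100 end up active. Change one agent's threshold by one and the chain breaks immediately, leaving a single active agent. The average threshold barely moves, so a "representative agent" would see two nearly identical populations.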

Who’s Doing the Work Already?

The good news is that all these alternative approaches aren’t just theoretical, they’re already being pushed forward, often from the margins of the field.

My hero, the father of Complexity Economics: W. Brian Arthur

It started with a man I consider to be my intellectual hero: W. Brian Arthur, often called the father of complexity economics. A Belfast-born economist who spent much of his career at the Santa Fe Institute after Queen’s University of Belfast (my alma mater!), Arthur argued that economies should be understood as complex adaptive systems: constantly evolving, driven by feedback, and full of path dependency.

His work on increasing returns and network effects in the 1980s laid the groundwork for how we now think about tech monopolies, lock-in, and the unpredictable dynamics of innovation. Others that I love include:

Jean-Philippe Bouchaud

My other hero (and a genius whose Substack I actually happen to run!). A physicist by training, hedge fund scientist by trade, Bouchaud has spent decades poking holes in the orthodoxies of finance. He was among the first to argue that the Black-Scholes model (the foundation of modern options markets) dangerously underestimated violent price jumps and feedback loops. More recently, he’s championed the inelastic markets hypothesis: the idea that stock prices don’t just reflect “fundamentals” but are permanently reshaped by flows of money in and out of markets.

Doyne Farmer & the Santa Fe Institute

Farmer, an ex-physicist turned complexity economist at Oxford, runs agent-based models (again, the things I did) of everything from financial contagion to climate risk, showing what happens when you let millions of diverse actors interact instead of assuming one “representative agent.”

Outside fields

Often the most radical ideas come from outsiders: physicists, data scientists, ecologists. They’re (luckily!) not trapped by economics’ shitty gatekeepers and more willing to treat the economy as a complex system rather than a theorem-proving exercise. Many of their insights (on contagion, resilience, and emergent behaviour) arrive decades before economists reluctantly catch up.

The Stakes

Alright, so in case you’re still reading… Why does any of this matter outside the faculty lounge/violent group chat?

Because the models economists still use shape the policies that govern your mortgage rate, your pension fund, and the stability of the global financial system!

The trouble is that Newtonian models are useless at explaining crises. They didn’t anticipate the crash of 2008, they can’t tell us why the dotcom bust stayed confined to tech stocks, and they shed little light on why some bubbles spread across the economy while others pop in isolation.

Econometrics, too, has its own blind spots. It is brilliant at the small stuff, but it is nearly silent on systemic collapse. The discipline has remained disturbingly vague about the structural fragilities that actually destabilise economies.

And yet, the tools to do better already exist. Complexity theory, agent-based modelling, and network analysis can map fragilities before they metastasise. They can reveal where shocks are likely to cascade, where resilience is thin, and where intervention might prevent disaster.

But for now?

These approaches remain fringe (used at hedge funds, in physics labs, or in pockets of heterodox research) while mainstream economics, and with it our economies, continues to run governments on 17th-century maths.

The stakes could not be clearer: as long as the profession clings to Newton’s toolkit, it risks steering policymakers with maps that are precise, elegant, and…

Dangerously wrong.

The Punchline

The Financial Times column that set this whole debate off was… half right?

Economics doesn’t need less math. The real problem is that it fetishises the wrong math, while ignoring the messy, modern mathematics that might actually explain how crises unfold.

Worse, the discipline has locked itself into this dumb publishing game where elegance is rewarded and careers depend on proving theorems no policymaker will ever use!

If economics doesn’t break its Newtonian addiction, it faces a bleak future.

It will continue to dazzle with models that look scientific but fail at the moments when science is needed most. And it risks becoming the polite Latin of the social sciences: elegant, precise, and…

Completely, totally and utterly useless.